Can We Stop AI from Destroying the Future?

ChatGPT, Grok, Midjourney, Google Veo, and the other AI tools currently out there aren’t going to destroy the world. They’re not even dangerous. At this point, they’re really nothing more than fun toys, advanced learning aids, work helpers, and a way for kids to cheat on their homework, which isn’t ideal but isn’t exactly a five-alarm problem either.

In other words, for now, the benefits of AI are likely to far exceed the liabilities. Sounds good, right?

Furthermore, that’s likely to remain the case for a while, because a number of fantastic potential uses of AI seem to be on the horizon: significant advances in medical technology, big increases in economic growth driven by improved productivity, personalized service, self-driving cars, incredible new apps, robots with amazing capabilities. It goes on and on. And if you live somewhere like America that has the technology and money to take advantage of it, it’s easy to see how AI could even create something akin to a golden age… for a while.

However, it would probably be a golden age with a dark underbelly, because AI is likely to create a lot of problems as well.

For example, AI could take over so many jobs that a big chunk of the population would be UNABLE to work. A dramatic increase in income inequality would leave small numbers of people phenomenally rich, while most people would be much poorer. AI would increasingly replace human interaction, including dating for a lot of people. Corporations, social media, and advertising would become orders of magnitude better at hooking and influencing people. Highly advanced AI-guided drones would be capable of killing enormous numbers of people in terrorist attacks. There would also be videos and statements so perfectly faked that people would have trouble telling what’s real and what isn’t.

So, is AI going to be “good” or “bad”? Well, the real answer is that it’s probably more of a mixed bag than people want to admit. It’s a technology that will have some huge positives, but also some large, more subtle negatives that could have a horrible impact on society.

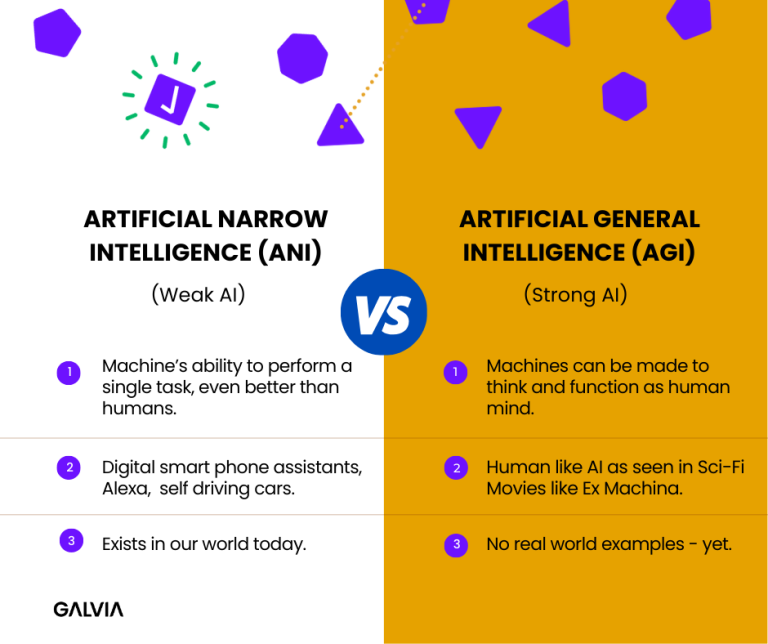

However, one of the biggest goals of people working with AI is Artificial General Intelligence (AGI), and it’s impossible to say at this point whether it will arrive in a few years or never. When people talk about the potential of AI ending the human race, this is the danger they’re talking about.

What’s so dangerous about AGI? Well, AI is already smarter than we are in specific areas. If you ask ChatGPT difficult questions about heart surgery, law, or quantum physics, it can give you expert-level answers. However, it has a very narrow focus and limited capabilities.

If it ever comes into being, AGI will not be like this. It will have a wide focus and vast capabilities. It will be an expert in every field, will increasingly outpace humans intellectually, and will be able, at least to a large degree, to have its own goals that may or may not align with ours. Many people envision AGI as something like a genie in a bottle, forgetting that in fairy tales and movies, genies often have their own agendas.

Certainly, the agenda can be good, like that of Will Smith’s Genie in Aladdin.

Of course, it can also be evil, like that of the Genie from the Wishmaster series.

You also have to consider who’s going to be controlling and programming the first AGI. Could it be China? Maybe. Some ruthless corporation? Maybe. Or some angry, antisocial scientific genius who makes the final breakthrough?

This is critical when you think about what we’re discussing here.

We’re talking about creating something that will be FAR smarter and more capable than human beings. It’s also very possible we will not be able to shut it down. In other words, imagine cows creating a human being to help them. They’d probably be thrilled at first, right? “Oh wow, this thing is keeping predators away from us! It’s bringing us food and water! It designed a scratching post! Best invention ever!”

They would probably continue to think that as the fences went up. They’d wonder where their friends were being led, what those new boots the human was wearing were made of, and most importantly, where that delicious-looking “steak” the person was eating came from.

You see, many of us are conceited enough to believe that we can create something far smarter than we are and control it. We think we can create rules for it.

Remember Asimov’s “Three Laws of Robotics”?

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey orders given to it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

That all sounds very nice, right? But if it’s smarter than us, more capable than us, and has its own goals, what makes anyone think we can control it, that it will pursue the goals we give it in ways we find acceptable, or even that it will share our goals? With AI, we have control, but once we get to AGI, we will no longer be fully in control. That might still work out fine… or maybe it won’t.

Some people don’t think this is a serious concern. Those people are foolish and naïve.

Even Elon Musk, who is heavily involved in AI and extremely optimistic about the technology, has estimated there’s as much as a 20% chance it will lead to the annihilation of humanity.

Of course, even if you agree that AI is a serious danger to humanity, what can we do about it now that it’s being worked on by individuals, corporations, and governments all over the planet?

Well, Tristan Harris, who was one of the earliest voices pointing out the problems being caused by social media, has a suggestion.

The short version is that the whole world has come together in the past to agree on things like the Nuclear Test Ban Treaty and the Geneva Conventions, and we should do it again with AI.

Granted, that’s a heavy lift.

Countries that are ahead of the game may not want to give up their advantage. We certainly don’t have any ready-made agreement about what should be done. Also, there will be strong incentives for governments to cheat, because cheating could give them a strategic advantage during a war.

However, we are talking about something here that could quite literally end the human race. If those are the stakes we’re playing for, shouldn’t we at least TRY to see if it’s possible to put some guardrails in place?

If we don’t want to see our species potentially wiped out, this at least sounds like something we should be discussing, right? Maybe it would be futile. Maybe we’re just going to let this play out and see what happens, but don’t kid yourself: we are rolling the dice here on the continued existence of humanity.

I see it unfolding as follows: AI in general will not itself destroy humanity. AI is just like other technologies that replace human labor… but orders of magnitude more job-killing. The move to replace human labor with robots and software will result in people destroying themselves out of anger and frustration over the loss of the meaning and purpose that working for a living provides.

This is the missing piece we are not talking about.

The human animal is evolutionarily built to struggle for survival, and then eventually for self-actualization. For most of our existence, the former has dominated our attention. However, self-actualization happened within this environment of working hard to survive.

With work eliminated, people will flounder and seek meaning, purpose, and self-actualization down other paths… and many of those paths will be destructive.

We see this today… all the violent protests and riots are done by unemployed and under-employed young people.

Our market economy replaced the hunter-gatherer existence… it is a sort of artificial game that provides a struggle to survive and thus fills that human psychological need… and does so in ways that provide productive outcomes to individuals and society as a whole.

We were built for this life struggle. We need struggle to fix our psychology. Take it away and all hell will break loose.

The tech nerds and Wall Street support universal basic income benefits being handed out to people unable to find work. That is a terrible idea. What we need instead are public policy goals, rules, and enforcement that restrict or mitigate the loss of jobs that technology enables. We should use a carrot-and-stick approach… with more carrots. For example, big tax benefits should be given to businesses that provide good jobs… and extra taxes should be levied on companies that use robots and software. The revenue from those taxes should be redirected into job creation and retention.

Well, you've got me thinking about it now. I'll be up most of the night considering how to find the narrow path between chaos and dystopia. Thank you for a treatise on a subject that MUST be addressed by humans of the Earth.