Is Artificial Intelligence Going to Destroy the Human Race?

Yes, that’s entirely possible.

Artificial intelligence is more dangerous than nuclear weapons. After all, the last time a nuclear weapon was used in war was in 1945. AI, on the other hand, is being used all over the place now, and it will be used EVERYWHERE in the coming decades. Nuclear weapons are considered forbidden and scary; they’re the ultimate red line you don’t cross unless you want to risk the destruction of the human race. Meanwhile, even schoolchildren are using AI to help with their homework as we speak.

However, most people have trouble getting a handle on the threat of artificial intelligence because it’s still relatively primitive. Before ChatGPT, people were putting out memes like this:

Obviously, ChatGPT is another big step in the right direction. Personally, I’ve done things with it like giving it a half dozen songs or movies I like and asking it to suggest more for me. It did a great job. I asked it to list the greatest mixed martial artists of all time, and it gave me a list fairly similar to the one I would have come up with. It’s also great at finding study results, suggesting supplements for particular conditions, and even writing tweets or fairly generic copy in the style of a particular author. And it’s passing tests left and right:

ChatGPT passed law exams in four courses at the University of Minnesota and a business exam at the University of Pennsylvania’s Wharton School of Business (CNN).

University of Minnesota exams: After answering 95 multiple-choice questions and 12 essay questions, it scored a C+.

Wharton exam: In a business management course, it earned a B to B- grade. In a paper describing its performance, Christian Terwiesch, a Wharton business professor, said it did “an amazing job” at answering basic operations management and process-analysis questions.

ChatGPT passes AP English essay (The Wall Street Journal).

One WSJ columnist went back to high school for a day to test the chatbot’s ability to survive in a 12th-grade AP literature class. After using it to write a 500- to 1,000-word essay presenting an argument “that attempts to situate Ferris Bueller’s Day Off as an existentialist text,” she earned a grade in the B-to-C range.

ChatGPT passes Google coding interview for level-three engineer (PC Mag).

Google teams fed the chatbot questions designed to test the skills of a candidate for a level-three engineer position. “Amazingly ChatGPT gets hired at L3 when interviewed for a coding position,” according to an internal document.

ChatGPT scores 1020 on the SAT (Twitter).

One individual challenged the chatbot to take an entire SAT, and it scored in the 52nd percentile, according to data from the College Board. It scored a 500 in math and a 520 in reading and writing.

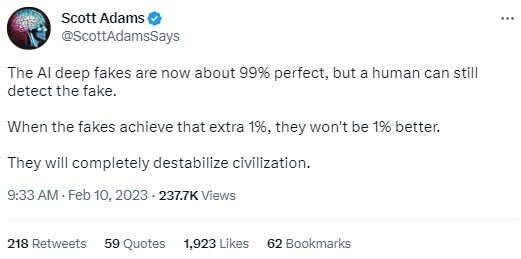

All of this is very impressive, but it’s still a fairly simplistic form of artificial intelligence compared to what’s coming. In fact, it makes me think back to something Scott Adams said about deepfakes (“A deepfake is a fraudulent piece of content—typically audio or video—that has been manipulated or created using artificial intelligence.”):

We’re not far from a point where people will be able to create a fake video that’s indistinguishable from the real thing. Think about the potential ramifications of every foreign intelligence service, political operative, activist, criminal, and malicious teenage boy being able to create a video of anyone saying or doing anything that people can’t tell apart from reality. It will be havoc – and it’s very close.

AI is also relatively close to passing some dangerous milestones. In a sense, it has already surpassed us. How many human beings can pass a medical exam, a legal exam, and an engineering exam, and also beat high-level chess players? Probably none, and things are going to keep tilting in that direction. AI is too fast, and it makes too many connections that most humans can’t make without help from a computer. A few snippets of personal data may be enough for it to infer everything from your political leanings and race to your income and the products you’re most likely to buy. How could it not be used for marketing? For targeting voters? By corporations?

Consider the speed with which it operates, and ask yourself how the military will be able to avoid using it. When a tank, plane, or drone run by AI is much faster, more accurate, and cheaper than one with a human in charge, do you think they won’t use it? What happens when one nation finally does, and the only choice is to either use AI or lose? Every military will then move to AI. AI is going to spread EVERYWHERE.

The problem is that AI will be used more and more, spread to every corner of the earth, and grow ever smarter than we are. We’re going to be like preschoolers trying to figure out how to keep Elon Musk from outsmarting us and taking over. Speaking of Elon Musk, he’s a perfect example of the duality of AI. On the one hand, he is reportedly preparing to launch a major AI project at Twitter; on the other, he signed a letter calling for a pause of at least six months in training AI systems more powerful than GPT-4 because the technology is so dangerous:

Contemporary AI systems are now becoming human-competitive at general tasks,[3] and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete, and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable. This confidence must be well justified and increase with the magnitude of a system's potential effects. OpenAI's recent statement regarding artificial general intelligence, states that "At some point, it may be important to get independent review before starting to train future systems, and for the most advanced efforts to agree to limit the rate of growth of compute used for creating new models." We agree. That point is now.

Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4. This pause should be public and verifiable and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.

AI labs and independent experts should use this pause to jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts. These protocols should ensure that systems adhering to them are safe beyond a reasonable doubt.[4] This does not mean a pause on AI development in general, merely a stepping back from the dangerous race to ever-larger unpredictable black-box models with emergent capabilities.

Again, we’re talking about a project with so much potential that corporations and governments feel almost compelled to get on board, even though it could make humans “obsolete” and we could “risk loss of control of our civilization.” These are not idle threats, either. Once AI is woven into our computers, our military, and our infrastructure, all bets are off, because we’re no longer talking about a computer program; we’re talking about something more akin to an alien intelligence that surpasses our own. Humans being what they are, you can be sure that someone will create malevolent AI deliberately designed to hurt us. And even when we create AI designed to serve us, there’s no guarantee that’s what it will decide to do.

In fact, this reminds me of playing Dungeons and Dragons. I played the game a lot in high school and in my twenties. We had a nice-sized crew of people, and I remember running a campaign for the group once that featured something called a “wishing imp.” My players were initially thrilled to get their hands on it because it actually gave them whatever they wished for. Sounds great, right? Of course, there was a hitch: the wishing imp twisted every wish to harm the person making it. To give you an example of how it worked, one of the first wishes the players made was for a “powerful magic weapon.” It immediately appeared, and it was indeed quite powerful. Unfortunately, it belonged to an extremely powerful demon who showed up soon after to maul the whole group for “stealing” his weapon. It went on and on like this for a while. The players would make ever more detailed wishes, trying to get around the way the cursed imp twisted them, and I’d find a different way to screw them each time.

AI could very well turn out to be exactly like that wishing imp: integrated into everything, capable of running circles around us at will, and… what happens then? How do we treat species dumber than we are? Certainly, some have it better than others. A lot of dogs and cats even have it pretty good. Not all of them, though. How about pigs? Cows? Roaches? Termites? We may be building our own replacements, and we would be wise to spend more time figuring out how to prevent that before we end up like turkeys in November, just starting to realize that their “friend” the farmer may not be bringing them more food this time.

I liked the message of "I, Robot" (the Will Smith version). VIKI wasn't inherently evil; she just wanted the safest and most helpful environment for humans. And anyone who opposed that goal was the Bad One.

Hey, John, what's the deal? My email notification from you had a Gmail security alert with a big bold red box above the title of this post claiming, "This message seems dangerous. The sender's account may have been compromised. Avoid clicking links, downloading attachments or replying with personal information. If you know the sender, consider alerting them (but avoid replying to this email)." All the embedded stuff was blanked out, and I couldn't like or open any links until I clicked the "looks safe" box in the screaming red warning. Has anyone else reported this to you, or is Gmail messing with you?